Breakthroughs in Photonic Computers

October 8, 2013

A research team led by University of Colorado-Boulder (UC) researcher Miloš A. Popović, an assistant professor of electrical, computer and energy engineering, has developed a new technique that allows microprocessors to use light, instead of electrical wires, to communicate with transistors on a single chip. The team includes collaborators from the Massachusetts Institute of Technology and Micron Technology Inc.

These breakthroughs could allow the exponential improvement in microprocessors that began nearly half a century ago, known as Moore’s Law, to continue well into the future, enabling ever faster electronics, from supercomputers to laptops to smartphones. The system under development could also lead to extremely energy-efficient computing.

Popović and his colleagues created two different optical modulators – structures that detect electrical signals and translate them into optical waves – that can be fabricated within the same processes already used in industry to create today’s state-of-the-art electronic microprocessors.

The modulators are described in a recent issue of the journal Optics Letters.

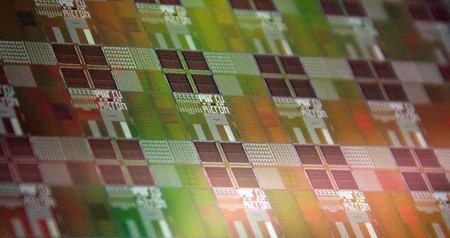

Optical modulator chips could make computing extremely energy efficient and keep computing speeds climbing. Image Credit: University of Colorado at Boulder.

Moore’s Law, first proposed by Gordon Moore in 1965, predicts that the size of the transistors used in microprocessors can be shrunk by half about every two years for the same production cost, allowing twice as many transistors to be placed on the same-sized silicon chip. The net effect would be a doubling of computing speed every couple of years.
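As a rough illustration of what “twice as many transistors every two years” compounds to, the sketch below projects an idealized transistor count. The function name and the one-million starting count are hypothetical, and real chips have never tracked the curve exactly.

```python
def projected_transistor_count(initial_count: float, years: float,
                               doubling_period_years: float = 2.0) -> float:
    """Idealized Moore's Law projection: the count doubles once per doubling period."""
    if doubling_period_years <= 0:
        raise ValueError("doubling period must be positive")
    return initial_count * 2 ** (years / doubling_period_years)


if __name__ == "__main__":
    # Hypothetical starting point: one million transistors.
    for years in (0, 2, 4, 10, 20):
        print(years, "years:", projected_transistor_count(1_000_000, years))
```

Ten years of doubling every two years multiplies the count by 2^5 = 32; twenty years multiplies it by 2^10 = 1,024.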

Moore’s projection held true until relatively recently. Transistors continue to get smaller, but halving their size no longer doubles computing speed, because the limiting factor in microelectronics is now the power needed to keep microprocessors running. The vast amount of electricity required to switch millions of tiny, densely packed transistors on and off causes excessive heat buildup.
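The article does not give a formula, but a standard first-order model of the switching (“dynamic”) power of CMOS logic is P = αCV²f, where α is the fraction of capacitance switched per cycle, C the switched capacitance, V the supply voltage and f the clock frequency. The sketch below applies that textbook model to made-up numbers to show why switching more transistors raises power unless voltage or frequency comes down. It ignores leakage and is not a model of any real chip.

```python
def dynamic_power_watts(activity: float, capacitance_farads: float,
                        voltage_volts: float, frequency_hz: float) -> float:
    """First-order CMOS switching power, P = alpha * C * V**2 * f (leakage ignored)."""
    return activity * capacitance_farads * voltage_volts ** 2 * frequency_hz


if __name__ == "__main__":
    # Hypothetical chip: 10% activity, 1 nF switched capacitance, 1.0 V supply, 3 GHz clock.
    base = dynamic_power_watts(0.1, 1e-9, 1.0, 3e9)
    # Twice as many transistors switching (double C) at the same voltage and clock.
    doubled = dynamic_power_watts(0.1, 2e-9, 1.0, 3e9)
    print(f"base: {base:.2f} W, doubled capacitance: {doubled:.2f} W")
```

Because V enters squared, lowering the supply voltage was historically a major lever for containing power as transistors shrank; once that lever became hard to pull further, power became the limit described above.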

Popović explains, “The transistors will keep shrinking and they’ll be able to continue giving you more and more computing performance. But in order to be able to actually take advantage of that you need to enable energy-efficient communication links.”

Microelectronics are also limited by “cross talk,” which can occur when data-carrying electrical wires are placed too close together.

Over the last half-dozen years, microprocessor manufacturers have kept increasing computing speed by packing more than one microprocessor into a single chip to create multiple “cores.” But that technique is limited by the amount of communication then needed between the cores, which also consumes a great deal of electricity.

Popović says using light waves instead of electrical wires for microprocessor communication functions could eliminate the limitations now faced by conventional microprocessors and extend Moore’s Law into the future.

Photonics, the use of light-based circuits for communication, has two main advantages over conventional wires: light has the potential to be extremely energy efficient, and a single fiber-optic strand can carry a thousand different wavelengths of light at the same time, allowing many communications to travel simultaneously in a small space without cross talk.
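Carrying many wavelengths on one strand is known as wavelength-division multiplexing (WDM): each wavelength acts as an independent channel, so total capacity grows with the number of channels. The sketch below is an idealized calculation; the function name and the 10 Gb/s per-channel rate are assumed, illustrative values, not figures from the article.

```python
def aggregate_bandwidth_gbps(num_wavelengths: int, per_channel_gbps: float) -> float:
    """Idealized WDM capacity: every wavelength carries its own independent data stream."""
    if num_wavelengths < 0 or per_channel_gbps < 0:
        raise ValueError("channel count and rate must be non-negative")
    return num_wavelengths * per_channel_gbps


if __name__ == "__main__":
    # Each wavelength is treated as one independent channel at a hypothetical 10 Gb/s.
    for channels in (1, 8, 1000):
        print(channels, "wavelengths ->", aggregate_bandwidth_gbps(channels, 10.0), "Gb/s")
```

With the assumed rate, 1,000 wavelengths would give 10,000 Gb/s on a single strand; real systems fall short of this ideal.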

Popović explains that optical communication isn’t new; it’s already the foundation of the Internet and of most phone lines. But to make optical communication economically viable at the tiny scale of microprocessors, the photonics has to be fabricated in the same foundries that already make the microprocessors. Photonics has to be integrated side by side with the electronics in order to get “buy-in” from the microprocessor industry.

“In order to convince the semiconductor industry to incorporate photonics into microelectronics you need to make it so that the billions of dollars of existing infrastructure does not need to be wiped out and redone,” Popović said.

Last year, Popović collaborated with scientists at MIT to show, for the first time, that such integration is possible. “We are building photonics inside the exact same process that they build microelectronics in,” Popović said. “We use this fabrication process and instead of making just electrical circuits, we make photonics next to the electrical circuits so they can talk to each other.”

In two papers published last month in Optics Letters, with UC-Boulder postdoctoral researcher Jeffrey Shainline as lead author, the research team refined its original photonic-electronic chip, detailing how the crucial optical modulator, which encodes data on streams of light, could be made more energy efficient. That optical modulator is compatible with a manufacturing process known as Silicon-on-Insulator Complementary Metal-Oxide-Semiconductor (SOI CMOS), which is used to create state-of-the-art multicore microprocessors such as the IBM Power7 and the Cell processor used in the Sony PlayStation 3.
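An optical modulator encodes data by switching the intensity (or phase) of light in step with an electrical bit stream. The article does not name the modulation format, so the sketch below assumes the simplest one, on-off keying, and also computes energy per bit (electrical power divided by data rate), a figure of merit commonly used to compare modulators. The function names and the 1 mW / 10 Gb/s numbers are hypothetical illustrations, not values from the Optics Letters papers.

```python
def ook_modulate(bits, on_level=1.0, off_level=0.0):
    """On-off keying (assumed format): a 1 lets light through, a 0 blocks it."""
    return [on_level if bit else off_level for bit in bits]


def energy_per_bit_fj(power_watts: float, bit_rate_bps: float) -> float:
    """Energy per bit in femtojoules: power / data rate, converted from joules (1 fJ = 1e-15 J)."""
    if bit_rate_bps <= 0:
        raise ValueError("bit rate must be positive")
    return power_watts / bit_rate_bps * 1e15


if __name__ == "__main__":
    # Relative optical power in each bit slot for a short example bit stream.
    print(ook_modulate([1, 0, 1, 1, 0]))
    # Hypothetical modulator drawing 1 mW while running at 10 Gb/s.
    print(f"{energy_per_bit_fj(1e-3, 10e9):.0f} fJ/bit")
```

Lowering energy per bit means less power, and so less heat, for the same data rate.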

The researchers also detailed a second type of optical modulator that could be used in a different chip-manufacturing process, called bulk CMOS, which is used to make memory chips and the majority of the world’s high-end microprocessors.

Vladimir Stojanovic, who leads one of the MIT teams collaborating on the project and who is the lead principal investigator for the overall research program, said the group’s work on optical modulators is a significant step forward.

“On top of the energy-efficiency and bandwidth-density advantages of silicon-photonics over electrical wires, photonics integrated into CMOS processes with no process changes provides enormous cost-benefits and advantage over traditional photonic systems,” Stojanovic said.

Let’s hope the microprocessor foundries “buy in”!